Evaluating alternatives to LLMrefs? This guide compares seven credible options across engine coverage, sampling cadence, citation transparency, collaboration features, and starting price.

If you’re researching AI search visibility tracking tools, use the comparison snapshot to match each platform to your needs, whether you want plug-and-play monitoring, cross-engine reporting, or workflows that turn insights into content fixes.

Quick Picks (TL;DR)

- Best overall (agencies & content teams): Rankability’s AI Analyzer.

- Best cross-engine reporting: Peec AI.

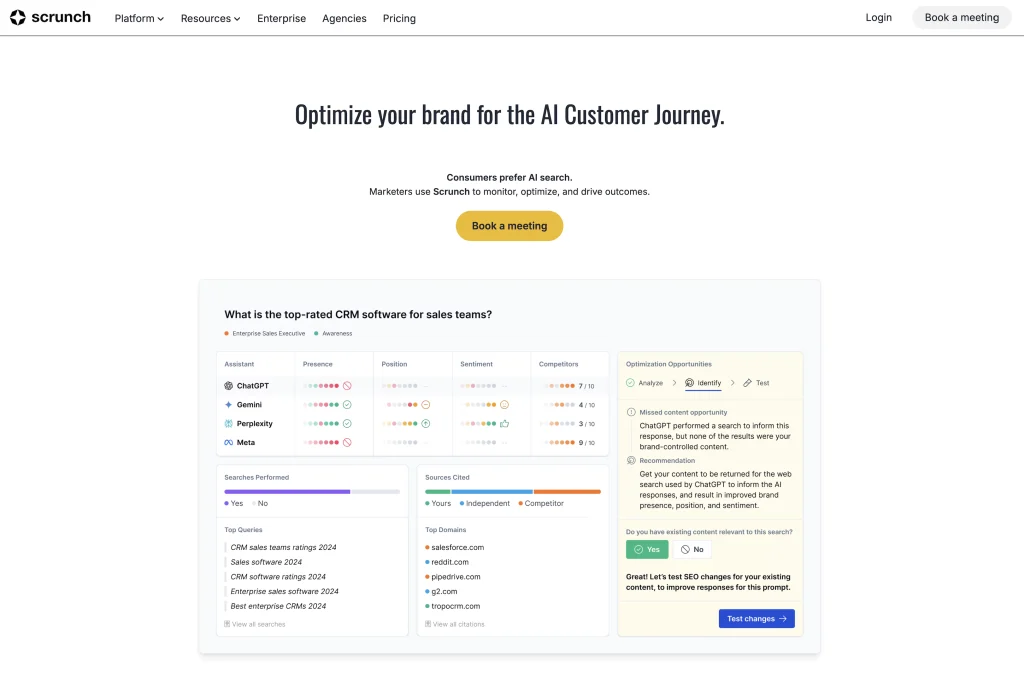

- Best enterprise optimization stack: Scrunch AI.

- Best mid-market analytics depth: AthenaHQ.

- Best budget starter: Rankscale AI.

- Best for SE Ranking users: SE Ranking’s AI Visibility Tracker.

- Best plug-and-play monitoring: Otterly AI.

What We Looked For (and why it matters)

- Engine coverage. ChatGPT, Perplexity, Google (AI Overviews/AI Mode), Gemini, Claude, Copilot, etc. If your buyers consult it, you should measure it.

- Sampling cadence & transparency. How often prompts re-run and whether citations/sources are stored for auditability.

- Actionability. Not just charts—clear gaps to fix with content/tech changes.

- Collaboration & scale. Seats/roles, exports, APIs, and history for trendlines.

- Price-to-value. Cost scales with cadence and engines; over-tracking can burn credits quickly.
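To make “citation transparency” and “actionability” concrete, here is a minimal Python sketch of the kind of audit that stored citations make possible: given sampled answers per prompt and engine, it lists the prompt/engine pairs where your domain was never cited. It assumes you can export each answer’s cited URLs; the record shape (`SampledAnswer`), the helper names, and the example domains are hypothetical and do not reflect any vendor’s export format.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class SampledAnswer:
    """One stored AI answer (hypothetical record shape, not a vendor export format)."""
    prompt: str
    engine: str
    cited_urls: list[str] = field(default_factory=list)


def domain_of(url: str) -> str:
    """Lowercased host without a leading 'www.' so citations compare cleanly."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def cites_domain(answer: SampledAnswer, own_domain: str) -> bool:
    """True if any citation points at own_domain or one of its subdomains."""
    for url in answer.cited_urls:
        host = domain_of(url)
        if host == own_domain or host.endswith("." + own_domain):
            return True
    return False


def citation_gaps(answers: list[SampledAnswer], own_domain: str) -> list[tuple[str, str]]:
    """Sorted (prompt, engine) pairs where no sampled answer cited own_domain."""
    seen: set[tuple[str, str]] = set()
    cited: set[tuple[str, str]] = set()
    for answer in answers:
        key = (answer.prompt, answer.engine)
        seen.add(key)
        if cites_domain(answer, own_domain):
            cited.add(key)
    return sorted(seen - cited)


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = [
        SampledAnswer("best crm for startups", "chatgpt", ["https://www.example.com/crm"]),
        SampledAnswer("best crm for startups", "perplexity", ["https://rival.io/guide"]),
        SampledAnswer("crm pricing", "chatgpt", []),
        SampledAnswer("crm pricing", "perplexity", ["https://blog.example.com/pricing"]),
    ]
    for prompt, engine in citation_gaps(sample, "example.com"):
        print(f"No citation: {prompt!r} on {engine}")
```

Each gap is a candidate for a content or technical fix; a tool that hides its sources makes this kind of prioritization impossible.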

Quick Comparison Snapshot

| Tool | Best for | Starting price* | Engines/coverage (examples) | Notes |
|---|---|---|---|---|
| Rankability AI Analyzer | Agencies & content teams that want monitoring → fixes | From $124/mo, billed annually (suite) | ChatGPT, Gemini, Claude, Perplexity, Grok, DeepSeek, Copilot; Google AI Overviews & AI Mode | Analyzer ties into Rankability’s Optimizer & Monitoring. |
| Peec AI | Cross-engine reporting & exports | €89/mo (Starter) | ChatGPT, Perplexity, Google AI Overviews (AIO); add-ons for Gemini, AI Mode, Claude, DeepSeek, Llama, Grok | Unlimited seats on all tiers; yearly discount available. |
| Scrunch AI | Enterprise GEO & optimization workflows | $300/mo (Starter) | ChatGPT, Perplexity, Claude, Meta AI, Gemini, Google AI Overviews | Annual plan advertises 2 months free; AXP available for AI-first site experiences. |
| AthenaHQ | Mid-market teams needing analytics depth | $295+/mo (Starter) | ChatGPT, Perplexity, Google AI Overviews & AI Mode, Gemini, Claude, Copilot, Grok | Credit-based; unlimited seats with role-based access control (RBAC) noted on plan. |
| Rankscale AI | Budget starter with credit-based runs | $20/mo (Essentials) | ChatGPT, Claude, Perplexity, Google AI Overviews (and more) | Flexible, credit-based pricing; fast-moving product updates. |
| SE Ranking – AI Visibility | Teams already using an SEO suite | $89/mo (suite plan incl. AI Results Tracker prompts) | Google AI Overviews, AI Mode, ChatGPT; Perplexity & Gemini listed as “coming soon” | Prompt allowances vary by tier; lives inside the SE Ranking platform. |
| Otterly AI | Plug-and-play monitoring | $29/mo (Lite) | ChatGPT, Google AI Overviews, Perplexity, Copilot; add-ons for Gemini & AI Mode | Add-ons priced per plan; free trial available. |

*Starting prices change often – verify current pricing before purchase.

The Alternatives

1. Rankability’s AI Analyzer (Best overall)

If you want monitoring that connects to fixes, Analyzer stands out: you can test branded and commercial prompts across top AI assistants, capture the answers and their citations, then hand issues off to Rankability’s Content Optimizer to adjust pages and briefs. That closes the loop from insight → action.

Coverage spans ChatGPT, Gemini, Claude, Perplexity, Grok, DeepSeek, and Microsoft Copilot, plus Google AI Overviews and AI Mode, with an emphasis on competitive mapping and visibility over time.

Good fit if: You run multi-client workflows and need reports that translate into content work, not just slides.

2. Peec AI (Best cross-engine reporting)

Peec focuses on brand performance across AI platforms with clear dashboards for rankings, sources/citations, prompts, and competitor comparisons.

It’s built for marketing teams that need multi-engine share of voice (SOV) and easy exports; third-party reviews often describe it as a strong baseline for accuracy and breadth.

Good fit if: You want fast cross-engine reporting and consistent sampling without adopting a larger SEO suite.

3. Scrunch AI (Best enterprise optimization stack)

Scrunch frames generative engine optimization (GEO) as a monitor → optimize loop. Beyond tracking how your brand appears versus competitors, it’s designed to identify opportunities and support content and site-experience changes.

For enterprise teams, that optimization-oriented stack (plus SOV views and benchmarking) is compelling, though it can be overkill for smaller teams.

Good fit if: You need enterprise-grade Share of Voice and structured optimization workflows.

4. AthenaHQ (Best mid-market analytics depth)

AthenaHQ positions itself as a full GEO platform with deep analytics. A distinctive feature is QVEM, a query volume estimation model that approximates demand in AI search; it’s useful when traditional keyword volumes don’t reflect how people actually use AI assistants.

It’s a thoughtful choice for teams that want deeper modeling, not just monitoring.

Good fit if: You want analysis & modeling (e.g., prompt demand estimation) alongside visibility tracking.

5. Rankscale AI (Best budget starter)

Rankscale offers daily or hourly prompt simulations across major assistants and tracks citations, domains, and relative ranking of brands in responses.

It’s a leaner option that still checks the core boxes and moves quickly on feature updates—ideal if you’re price-sensitive but want real monitoring.

Good fit if: You’re starting GEO programs and need signal without heavy cost.

6. SE Ranking’s AI Visibility Tracker (Best for SE Ranking users)

If your team already relies on SE Ranking, its AI Visibility/AI Mode features make adoption easy: track mentions (including unlinked) in AI answers, compare against competitors, and follow visibility changes over time, all inside the same SEO suite you use for keywords, technical audits, etc.

Good fit if: You prefer suite consolidation and lighter onboarding for the team.

7. Otterly AI (Best plug-and-play monitoring)

Otterly markets a GEO starter experience: quick setup, ChatGPT rank tracking, and monitoring of brand mentions and website citations to keep your team in “action mode.”

Community chatter often frames it as a simple, pragmatic option—great to get moving, even if it’s lighter on advanced analytics.

Good fit if: You want simple dashboards and momentum without a large platform.

LLMrefs vs. These Alternatives: When to Switch

LLMrefs is a recognizable name with broad AI search engine coverage and an aggregated LLMrefs Score KPI. If you’re satisfied with monitoring but want stronger optimization workflows, richer analytics, or suite consolidation, consider switching.

- Need fix workflows + content handoffs? Rankability or Scrunch.

- Want cross-engine trendlines and exports fast? Peec AI.

- Prefer analytics/models (e.g., demand estimation)? AthenaHQ.

- Price-sensitive, want daily prompts? Rankscale AI.

- Already using SE Ranking as your SEO suite? SE Ranking’s AI Visibility Tracker.

- Starter, plug-and-play? Otterly AI.

Migration Checklist (LLMrefs → New Platform)

- Export what you track today. Prompts, brand terms, competitors, historical trendlines (including any “score” KPIs).

- Normalize your taxonomy. Brand vs non-brand, product/category prompts, funnel stages.

- Replicate cadence. Match your daily/weekly schedules and set a number of re-runs per prompt to control for answer variance.

- Stand up dashboards. Share of Voice, citations/sources, “no-citation” gaps, competitor comparisons.

- Wire to content ops. Connect monitoring to page briefs, updates, and QA checks—ideally in one stack.

- Backfill history. Import CSVs where supported; annotate methodology changes so trendlines remain meaningful.
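For the dashboard and cadence steps, it helps to see how a share-of-voice (SOV) figure can be derived from re-runs. The Python sketch below assumes one common definition (each brand’s share of all tracked-brand mentions), counts a brand at most once per answer, and uses a crude whole-word match; the function names, brands, and answers are hypothetical. Vendors may define SOV differently, which is exactly why annotating methodology changes matters when you compare new numbers with LLMrefs history.

```python
import re


def mentions(text: str, brand: str) -> bool:
    """Crude case-insensitive whole-word match (an assumption; real tools use entity matching)."""
    pattern = r"(?<!\w)" + re.escape(brand) + r"(?!\w)"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def mention_rate(answers: list[str], brand: str) -> float:
    """Fraction of re-run answers that mention the brand."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    return sum(mentions(a, brand) for a in answers) / len(answers)


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all tracked-brand mentions; a brand counts once per answer."""
    counts = {b: sum(mentions(a, b) for a in answers) for b in brands}
    total = sum(counts.values())
    if total == 0:
        return {b: 0.0 for b in brands}
    return {b: n / total for b, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical answers from re-running one prompt four times on one engine.
    reruns = [
        "Acme and Globex are popular choices.",
        "Most teams pick Acme.",
        "Consider Globex or Initech.",
        "Acme leads this category.",
    ]
    brands = ["Acme", "Globex", "Initech"]
    print(mention_rate(reruns, "Acme"))  # 0.75
    print(share_of_voice(reruns, brands))
```

Averaging over several re-runs smooths out the run-to-run variation in AI answers, so a single lucky or unlucky sample doesn’t swing the trendline.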

Buying Guide (how to choose in 2025)

- Match engines to your funnel. E.g., research-heavy journeys → ChatGPT/Perplexity; retail/evergreen → Google AI Overviews/AI Mode.

- Demand citation transparency. You need links/sources to prioritize fixes.

- Plan seats/exports early. Agency teams need roles, API access, and report automation.

- Model cost vs. cadence. More runs don’t automatically mean better insights; the right frequency keeps budgets in check.
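A back-of-the-envelope model helps before you commit to a plan. This Python sketch assumes each sampled answer (one prompt × one engine × one run) consumes one unit of quota; real vendors meter differently (credits, prompt allowances, per-engine add-ons), and the `PromptGroup` shape and prompt counts are hypothetical and illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class PromptGroup:
    """Prompts tracked at one cadence (hypothetical plan shape, not a vendor schema)."""
    name: str
    prompts: int
    engines: int
    runs_per_week: int  # 1 = weekly, 7 = daily
    reruns: int = 1     # repeated samples per run, for variance control

    def answers_per_week(self) -> int:
        return self.prompts * self.engines * self.runs_per_week * self.reruns


def monthly_answers(groups: list[PromptGroup]) -> int:
    """Estimated sampled answers per month (52 weeks / 12 months), rounded up."""
    weekly = sum(g.answers_per_week() for g in groups)
    return math.ceil(weekly * 52 / 12)


if __name__ == "__main__":
    # Weekly baseline plus daily runs for a handful of critical prompts.
    mixed = [
        PromptGroup("baseline", prompts=90, engines=4, runs_per_week=1),
        PromptGroup("critical", prompts=10, engines=4, runs_per_week=7),
    ]
    all_daily = [PromptGroup("everything", prompts=100, engines=4, runs_per_week=7)]
    print(monthly_answers(mixed))      # 2774
    print(monthly_answers(all_daily))  # 12134
```

With these example numbers, a weekly baseline plus daily runs for 10 critical prompts comes to roughly 2,774 answers per month, versus roughly 12,134 if all 100 prompts ran daily on the same four engines: about 4.4× the usage for the same prompt set.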

FAQs

Is LLMrefs still worth it in 2025?

Yes—especially if your team wants an aggregated LLMrefs Score across top engines. If you outgrow monitoring and want deeper optimization or consolidation with other SEO tasks, consider the alternatives above.

How do Rankability’s Analyzer and LLMrefs differ?

Both track brand visibility across multiple assistants. Analyzer’s advantage is the built-in content workflow (analyze → optimize within one ecosystem), which shortens time-to-fix for issues you uncover.

Which engines matter most?

For many brands: ChatGPT and Perplexity for research/inspiration; Google’s AI Overviews/AI Mode for SERP-adjacent exposure. Prioritize where your audience actually evaluates options.

How often should we sample prompts?

Weekly is a safe baseline; move to daily for critical prompts or volatile categories. Balance cost with the need for trend sensitivity.

How do we prove ROI?

Track branded/unbranded mentions & citations → content changes → movement in SOV and citations → assisted conversions/brand search lift. Most platforms provide the visibility metrics; you connect them to your analytics stack.

Methodology

We selected seven alternatives from a current industry roundup (September 2025), then verified positioning, feature claims, and use cases against vendor sites and third-party write-ups (Search Engine Land, independent reviews, and blog posts). Always check current pricing and coverage before purchase; these tools evolve quickly.
